🏋🏼 Heavy tails I: extremes events and randomness

November 2023The famous Pareto principle states that, when many independent sources contribute to a quantitative phenomenon, roughly 80% of the total originates from 20% of the sources: 80% of the wealth is owned by (less than) 20% of the people, you wear the same 20% of your wardrobe 80% of the time, 20% of your efforts are responsible for 80% of your grades, 80% of your website traffic comes from 20% of your content, etc.

This phenomenon mostly comes from a severe imbalance of the underlying probability distribution: for each sample, there is a not-so-small probability of this sample being unusually large. This is what we call heavy tails. In this post, we'll give a mathematical definition, a few examples, and show how they lead to Pareto-like principles.

The tail distribution of a random variable

If is a random number, the tail of its distribution is the probability of taking large values: . Of course, this function is decreasing in ; the question is, how fast?

Light tails

Some distributions from classical probability have tails which decrease quickly toward zero. It is the case for Gaussian random variables: a classical equivalent shows that if , then when is very large[1]

This probability is overwhelmingly small. For example, is already smaller than . It means that if you draw samples from the probability of having one of those samples greater than is approximately . That's possible, but very rare.

Heavy tails

A distribution is heavy-tailed when does not decay as fast as or even , but rather like inverses of polynomials: or for example. That's the case with the ratio of two standard Gaussian variables , whose density is . For this distribution called the Cauchy distribution[2], a direct computation gives

This decays very slowly. For example , which means that if you draw as few as 100 samples from then you will see one of the samples larger than 5 with probability . That's a very different behaviour than the preceding Gaussian example.

Mathematically, we say that a distribution is heavy-tailed if is asymptotically comparable to for some . By "asymptotically comparable", we mean that terms like should not count. There is a class of functions, called slowly varying, encompassing this: they are all the functions which are essentially somewhere between constant and logarithmic. Just forget about this technical point: for the rest of the note, think of "regularly varying" as "almost constant". I will keep this denomination.

The same definition holds for .

Densities

If has a density , then one can generally see the heavy-tail of in the asymptotics of . Roughly speaking, if for example when is large, then , so is heavy-tailed with index . Most of our following examples will have densities.

Log-scales

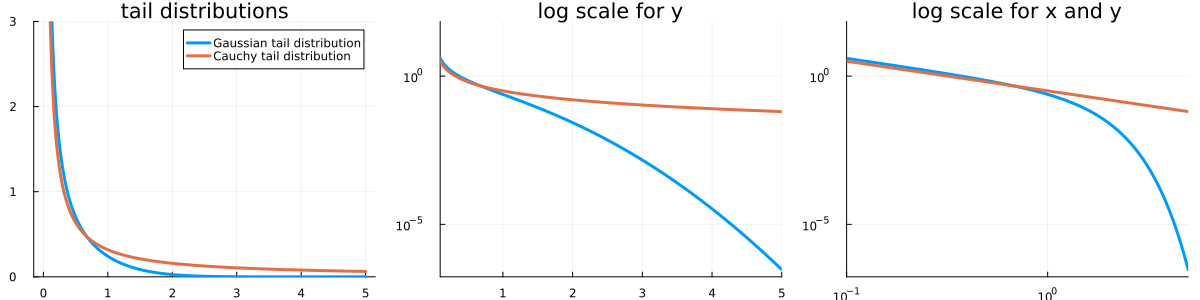

On the left, you see the two densities mentioned above: Gaussian and Cauchy. On classical plots like this, it is almost impossible to see if something is heavy-tailed or not. The orange curve seems to go to zero slower than the blue one, but at which rate? This is why, when it comes to heavy tails, log-scales on plots are ubiquitous. The plot on the middle is the same as the one on the left, but with a log-scale on the y-axis; and on the right, both axes have a log-scale.

In fact, if has a density , then discerning by bare visual inspection if decays to zero rather polynomially or exponentially is almost impossible. But on a log-log scale,

if we have : on a log-log plot, this is a linear relation ().

while if is (say) exponential, , then . On a log-log plot, this is an exponential relation ().

This is why most plots you will see on this topic are on log-scales or log-log scales.

Examples of heavy-tailed densities

The Power-Law, or Pareto distribution

The most basic and important example of a heavy-tailed distribution is the Pareto one. It is directly parametrized by its index and its density is given by

I started the distribution at 1, but some people add its starting point as a second parameter. It's really not important. The Pareto law is often denoted .

Other heavy-tailed densities

The Fréchet distribution has CDF and PDF

Closely related is the Inverse Gamma distribution,with

When , this is often called a Lévy distribution. It is a special case of the -stable distributions, which encompasses the Cauchy distribution. There is also the Burr distribution (of type XII), with density

A shortlist of mechanisms leading to heavy tails.

There are many survey papers on why heavy tails do appear in the real world, like Newman's one. In general, the most ubiquitous cases are the following ones:

simple transforms (like inverses or exponentials) of distributions. For example, the inverse of a uniform random variable has a heavy tail with index .

Maxima of many independent random variables often converge (after proper normalization) towards heavy-tailed distributions. This is called the Fisher-Tippett-Gnedenko theorem. This is why many areas in applied statistics (insurance, lifetime estimation, flood predictions, etc) have to deal with heavy tailed distributions.

Any dynamic phenomenon where an growth rate is independent of the size leads to sizes which are exponentials of classical random variables. In economics, this is called Gibrat's law.

Random recursions of the form often converge towards heavy-tailed distributions. This is a very deep result by Harry Kesten and I wrote a note on this topic.

The number of connections of nodes in a large network often follows a heavy-tailed distribution. This is notably the case for the Albert-Barabasi preferential attachment model.

Zipf's law is probably the most famous example. It says that the probability of a word appearing in a text is inversely proportional to its use rank: for example, the second most-used word ("of") is two times less frequent than the first one ("the").

The Lorenz curve of heavy-tailed distributions

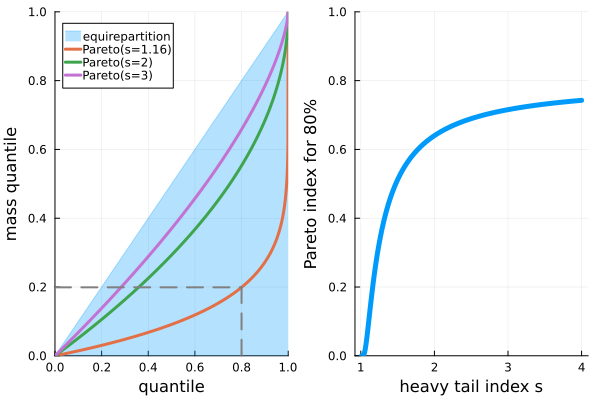

Now, let us see how heavy tails are the kind of distributions accountable for imbalances like the 80-20 principle. In general, we measure such imbalances using the Lorenz curve: this curves gives the amount of mass "produced" by the -th quantile of a probability distribution. By "mass", we mean the mathematical expectation. The correct definition of the Lorenz curve is the curve joining all points for all , where is the proportion of samples below level , and

is the proportion of the total mean coming from samples below . This is the same curve as where is the quantile function.

For Pareto distributions, we have hence . On the other hand and

so that . A mere computation gives the following picture.

Mass imbalance in heavy-tails.

The Lorenz curve of a is given by .

The quantile contributing the last 80% of the total mass is given by . I call this quantile the "Pareto Index" in the next picture below.

Pareto's "80-20 principle" corresponds to .

As shown with the dotted lines, the tail-index which seems to fit the 80-20 principle is . For this index (as for any index ), the Pareto distribution has a finite mean but no finite variance. For general heavy-tailed distributions, we would have the same kind of pictures, with really convex Lorenz curves. Of course, since the distribution becomes more and more heavy-tailed when is closer and closer to 1, these curves are less convex when increases.

In general, estimating the heavy-tail index is a difficult task. I have a note on this topic where I describe the Hill estimator.

References

The manual Fundamentals of heavy tails is simply the best book out there on heavy tails.

The book Fooled by Randomness, written by the unsufferable Nassim Nicholas Taleb, is a very good book on maths, stats, life, risk, finance, and randomness. As always with Taleb, shining pieces of wisdom are mixed with bad faith and purely idiotic stupidities – such books are the best books.

This paper by M. Newman is a gold mine for heavy-tail examples.

There's a self-improvement book on the 80/20 principle. Look at the cover: "the secret to achieving more with less", "the 80/20 principle is the cornerstone of results-based living", etc. These "books" are the equivalent of astrology or healing with stones, but marketed for overachieving young graduates starting a consulting carreer at McKinsey.

[1] This estimate is very precise: the error is of order , so as long as is greater than it is smaller than .

[2] Indeed, and when is close to zero .